I downgraded my internet service a few months ago, from 900Mbps to 200Mbps. Now, websites can take an excruciatingly long time to load, HD YouTube videos stall and buffer when I jump around in them, and video calls can be horribly choppy.

In other words, almost nothing has changed. I had the same problems even when I had near-gigabit download speeds, and I’m sure I’m not alone. Many of you have probably cursed a slow-loading page too, and been even more baffled when a “speed test” says your connection should be able to play hundreds of 4K Netflix streams at once.

So, what’s the deal?

As with any problem, there are several factors at work. But one of the most important is latency, the amount of time it takes for your device to send data to a server and get data back. It doesn’t matter how much bandwidth you have if your packets (the little bundles of data that travel across the network) get stuck somewhere. Popular speed tests, with their “ping” measurement, have given people some sense of latency, but the standard ways of measuring it haven’t always told the whole story.

The good news is that there’s a plan in the works to dramatically reduce latency, and major companies such as Apple, Google, Comcast, Charter, Nvidia, Valve, Nokia, Ericsson, T-Mobile parent company Deutsche Telekom, and others have shown interest. It’s a new internet standard called L4S that was finalised and published in January, and it could significantly cut the time we spend waiting for webpages or streams to load, as well as reduce glitches in video calls. It could also help change the way we think about internet speed and let developers build applications that simply aren’t viable on the internet as it exists today.

But before we get into L4S, we should lay some groundwork.

Why is my internet connection so slow?

There are several possible explanations. The internet is a vast network of interconnected routers, switches, fibres, and other devices that connect your device to a server (or, in many cases, several servers) somewhere. If there’s a holdup along the way, your browsing experience can suffer. And there are plenty of potential bottlenecks: the server hosting the video you want to watch may have limited upload capacity, a critical part of the internet’s infrastructure may be down, forcing data to take a longer route to reach you, your computer may be struggling to process the data, and so on.

The real kicker is that the lowest-capacity link in the chain sets the limit on what’s possible. You could be connected to the fastest server imaginable over an 8Gbps connection, but if your network can only handle 10Mbps at a time, that’s all you’ll get. Oh, and every delay adds up, so if your computer adds 20 milliseconds and your router adds 50 milliseconds, you’ll be waiting at least 70 milliseconds for something to happen. (These are arbitrary examples, but you get the idea.)
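The two rules in that paragraph (the slowest link caps throughput, and delays add up) fit in a few lines of Python. The hops and their numbers below are hypothetical, reusing the article’s illustrative 8Gbps, 10Mbps, 20ms, and 50ms figures; real paths have many more hops, and their delays change from moment to moment.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    """One hypothetical link in the chain between you and a server."""
    name: str
    capacity_mbps: float  # most data this hop can pass per second
    delay_ms: float       # time this hop adds to each packet


def path_throughput_mbps(hops: list[Hop]) -> float:
    # The weakest link sets the ceiling for the whole path.
    return min(hop.capacity_mbps for hop in hops)


def path_delay_ms(hops: list[Hop]) -> float:
    # Delays don't cancel out; they accumulate hop by hop.
    return sum(hop.delay_ms for hop in hops)


# Illustrative numbers only, echoing the examples in the text.
path = [
    Hop("your computer", capacity_mbps=10_000, delay_ms=20),
    Hop("your router", capacity_mbps=10, delay_ms=50),
    Hop("server connection", capacity_mbps=8_000, delay_ms=0),
]

print(path_throughput_mbps(path))  # 10
print(path_delay_ms(path))         # 70
```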

In recent years, network engineers and academics have raised concerns that traffic management techniques designed to keep network equipment from getting overwhelmed may actually slow things down. Part of the problem is what’s called “buffer bloat.”

That reminds me of a zombie foe from The Last of Us

Right? To understand what buffer bloat is, we first need to understand what buffers are. As we’ve touched on, networking is a bit of a dance: each part of the network (switches, routers, modems, and so on) has its own limit on how much data it can handle. But because the devices on the network and the amount of traffic they have to deal with are always changing, none of our phones or computers actually knows exactly how much data to send at any given moment.

To figure that out, they’ll typically start sending data at a relatively slow rate. If all goes well, they keep increasing it until something goes wrong. Traditionally, that “something” is dropped packets: a router somewhere receives data faster than it can send it out, says, “Oh no, I can’t handle this right now,” and simply throws it away. Very understandable.

Dropped packets don’t usually mean lost data (we’ve made computers smart enough to simply resend those packets if needed), but it’s still not ideal. So when the sender learns that packets have been dropped, it temporarily cuts its data rate before quickly ramping back up in case things have changed in the last few milliseconds.
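This speed-up-until-something-breaks pattern is usually called additive increase, multiplicative decrease (AIMD), the classic behaviour of loss-based congestion control such as TCP Reno. Here’s a toy Python sketch, assuming a made-up link that drops packets whenever the sender’s window exceeds 10 packets per round trip, and assuming the sender hears about the loss immediately (real senders only learn of it a round trip later, which makes the overshoot worse).

```python
def aimd_trace(capacity: int, steps: int, start: int = 1,
               increase: int = 1, decrease_factor: float = 0.5) -> list[int]:
    """Return the sending window (packets per round trip) at each step.

    capacity is a hypothetical link limit: any window above it counts as a loss.
    """
    window = start
    trace = []
    for _ in range(steps):
        trace.append(window)
        if window > capacity:
            # Packets were dropped: back off hard.
            window = max(1, int(window * decrease_factor))
        else:
            # No loss seen: probe for a bit more bandwidth.
            window += increase
    return trace


print(aimd_trace(capacity=10, steps=16))
# [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 5, 6, 7, 8, 9]
```

The resulting sawtooth (climb, overshoot, halve, climb again) is the cycle the article describes.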

That’s because sometimes the data overload that causes packets to be dropped is very brief; for example, maybe someone on your network is sending a photo on Discord, and if your router could just hold on until that passes through, you could continue your video call without interruption. That’s also one of the reasons many networking devices have buffers. If a device receives too many packets at once, it can temporarily store them and send them out in a queue. This lets systems handle large volumes of data and smooth out bursts of traffic that might otherwise cause problems.
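To see why a buffer helps with a short burst like that Discord photo, here’s a minimal Python simulation of a drop-tail FIFO queue (the simplest kind: when it’s full, new arrivals are discarded). Traffic is counted in packets per time slot, and every number, including the 10-packet burst, is a hypothetical value picked for illustration.

```python
def simulate_drop_tail(arrivals: list[int], service_rate: int, buffer_size: int):
    """Simulate a drop-tail FIFO queue one time slot at a time.

    arrivals: packets arriving in each slot
    service_rate: packets the outgoing link can send per slot
    buffer_size: most packets the device can hold at once
    Returns (sent, dropped, peak_queue, still_queued).
    """
    queued = sent = dropped = peak = 0
    for count in arrivals:
        # Accept what fits; anything beyond the buffer's free room is dropped.
        accepted = min(count, buffer_size - queued)
        dropped += count - accepted
        queued += accepted
        peak = max(peak, queued)
        # Send as much as the link allows this slot (FIFO: oldest first).
        out = min(service_rate, queued)
        queued -= out
        sent += out
    return sent, dropped, peak, queued


# Steady video-call traffic (3 packets/slot) plus one burst of 10 (the photo).
traffic = [3, 3, 10, 3, 3, 3, 3, 3]

print(simulate_drop_tail(traffic, service_rate=4, buffer_size=4))   # (25, 6, 4, 0)
print(simulate_drop_tail(traffic, service_rate=4, buffer_size=12))  # (30, 0, 10, 1)
```

With the small buffer, six packets from the burst are dropped; with the bigger one, nothing is lost, but a queue lingers for several slots afterward, which is where the trouble in the next section starts.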

I don’t get it. That sounds like a good thing

It is! But some people worry that, in the effort to keep things running smoothly, buffers have grown too large. As a result, packets can end up waiting for a (sometimes literal) second before continuing on their way. For some kinds of traffic, that’s not a problem; YouTube and Netflix both keep a buffer on your device, so you don’t need the next chunk of video right away. But if you’re on a video call or using a game streaming service like GeForce Now, the delay added by a buffer (or several buffers along the chain) can be a serious problem.
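The arithmetic behind buffer bloat is simple: a packet at the back of a full buffer has to wait for everything ahead of it to drain at the link’s rate. The sketch below assumes a hypothetical 1MB buffer on a 10Mbps upload link; real buffer sizes vary widely by device and aren’t given in the article.

```python
def queuing_delay_ms(queued_bytes: int, link_mbps: float) -> float:
    """How long the last packet in a queue waits: bits ahead of it / link rate."""
    bits_ahead = queued_bytes * 8
    return bits_ahead * 1000 / (link_mbps * 1_000_000)


# Hypothetical: a 1MB buffer that has filled up on a 10Mbps upload link.
print(queuing_delay_ms(1_000_000, link_mbps=10))  # 800.0
# The same link with just one full-size (1,500-byte) packet ahead of you.
print(queuing_delay_ms(1_500, link_mbps=10))      # 1.2
```

A full buffer on that link adds most of a second of delay, while a nearly empty one adds about a millisecond.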

There are some existing fixes, and there have been plenty of past attempts to write algorithms that manage congestion with an eye toward both throughput (how much data gets delivered) and lower latency. But many of them don’t play well with the congestion control methods in wide use today, which means deploying them on some parts of the internet could hurt traffic on others.

How can I have latency problems if I pay for gigabit internet?

This is an internet service provider, or ISP, marketing trick. When people say they want “faster” internet, they mean they want less time between asking for something and getting it. But ISPs sell connections based on capacity: how much data can you suck down at once?

There was a time when adding capacity really did cut down on how long you spent waiting. On 56 kilobit per second dial-up, downloading a nine-megabyte MP3 file from a totally legal website takes a long time: roughly 21 and a half minutes. Upgrade to a super-fast 10Mbps connection, and you’d have the song in under 10 seconds.

But as throughput keeps rising, the time saved gets harder to notice; you wouldn’t be able to tell the difference between a song download that takes 0.72 seconds on 100Mbps and one that takes 0.288 seconds on 250Mbps, even though the second is technically less than half the time. (And in reality, it takes longer than that, because downloading a song involves more than just transferring the data.) The numbers matter more when you’re downloading bigger files, but you still hit diminishing returns at some point; the difference between streaming a 4K movie 30 times faster than you can watch it versus five times faster isn’t very significant.
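Those download times all come from one formula: file size in bits divided by link speed. Here’s a quick Python check of the article’s figures, treating a megabyte as 8 megabits (decimal units) and, as the article notes, ignoring everything except the raw transfer.

```python
def download_seconds(size_megabytes: float, link_mbps: float) -> float:
    """Idealised transfer time: megabits to move / megabits per second.

    Ignores handshakes, protocol overhead, and latency, so real downloads
    take longer.
    """
    return size_megabytes * 8 / link_mbps


SONG_MB = 9  # the nine-megabyte MP3 from the example

for label, mbps in [("56k dial-up", 0.056), ("10Mbps", 10),
                    ("100Mbps", 100), ("250Mbps", 250)]:
    seconds = download_seconds(SONG_MB, mbps)
    print(f"{label:>11}: {seconds:9.3f} s ({seconds / 60:.1f} min)")
```

Going from dial-up to 10Mbps saves over 21 minutes; going from 100Mbps to 250Mbps saves about 0.43 seconds.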

The gap between our internet “speed” (really throughput: the question is less how fast the delivery truck is going and more how much it can carry per trip) and how those high-bandwidth connections actually feel becomes obvious when simple webpages take ages to load. In theory, we should be able to load text, images, and JavaScript at lightning speed.

Loading a webpage, however, takes many rounds of back-and-forth communication between our devices and servers, and each round trip adds latency. If packets are held up for 25 milliseconds, that adds up fast when they have to make the trip 10 or 20 times. The amount of data our connection can move at once isn’t the bottleneck; it’s the time our packets spend bouncing between devices. So adding more capacity won’t help.
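A deliberately crude model shows why: treat page load time as the time spent waiting on round trips plus the time spent actually moving bytes. The page size (2MB), the 20 round trips, and the 25ms delay per trip below are hypothetical inputs. Real browsers overlap many requests, so this overstates the waiting, but the proportions are the point.

```python
def page_load_seconds(page_mb: float, link_mbps: float,
                      round_trips: int, rtt_ms: float) -> float:
    """Crude estimate: sequential round-trip waits plus raw transfer time."""
    waiting = round_trips * rtt_ms / 1000
    transfer = page_mb * 8 / link_mbps
    return waiting + transfer


# Hypothetical page: 2MB of content, 20 round trips, 25ms per trip.
for mbps in (100, 200, 1000):
    print(f"{mbps:>5}Mbps: {page_load_seconds(2, mbps, 20, 25):.3f} s")

# Same 200Mbps link, but with the per-trip delay cut in half.
print(f"  200Mbps, half the delay: {page_load_seconds(2, 200, 20, 12.5):.3f} s")
```

In this model, quintupling capacity from 200Mbps to 1Gbps saves about 64ms, while halving the per-trip delay saves 250ms.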

So, what exactly is L4S, and how will it improve my internet speed?

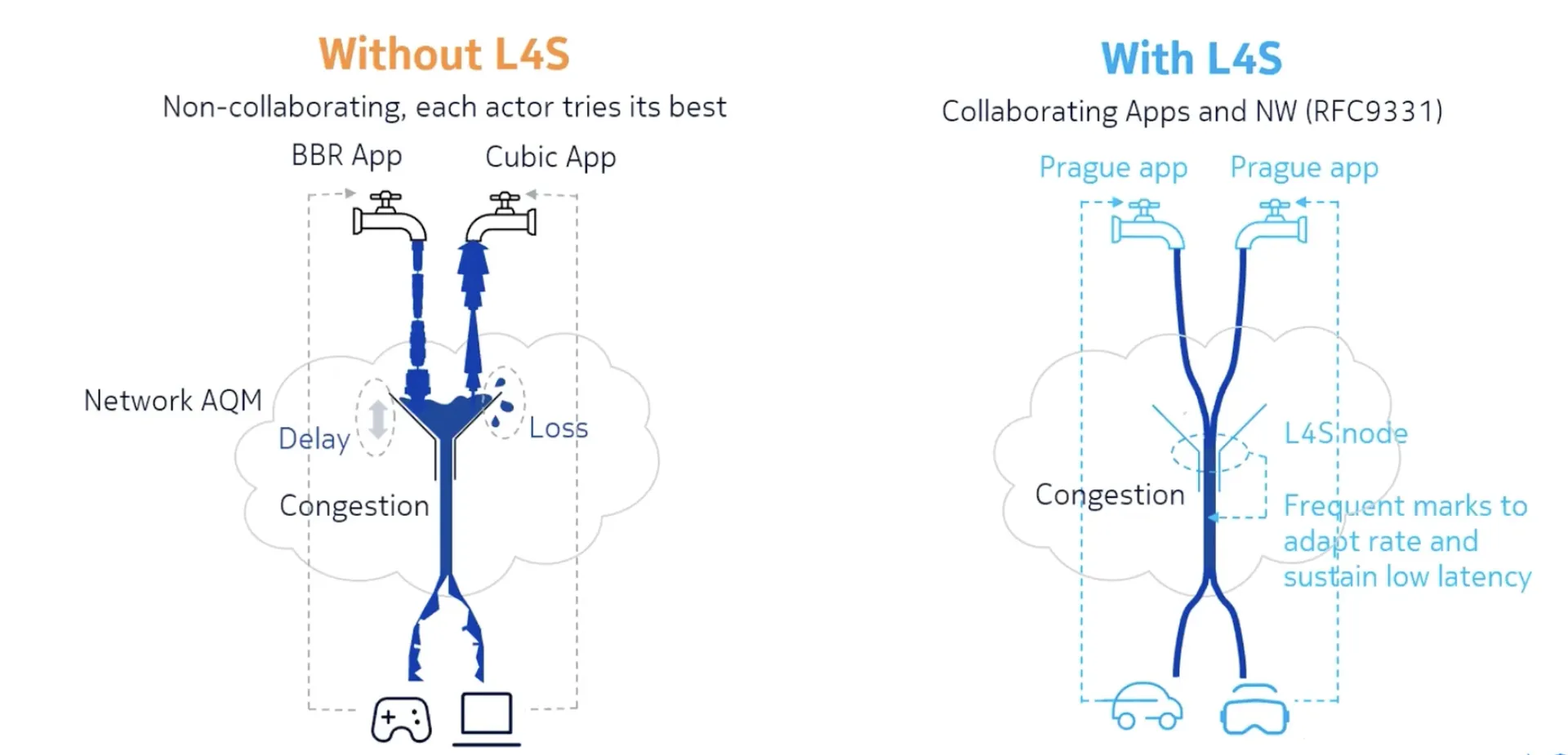

L4S stands for Low Latency, Low Loss, Scalable Throughput, and its goal is to reduce the need for queuing so your packets spend as little time as possible waiting in line. To do that, it tries to shorten the feedback loop: when congestion happens, L4S makes sure your devices find out about it almost immediately and can start doing something about it. Usually, that means backing off slightly on how much data they’re sending.

As we discussed earlier, our devices are constantly speeding up, then slowing down, and repeating that cycle, because the amount of data network links have to deal with is always changing. But dropped packets aren’t a great signal, especially when buffers are involved: your device won’t realise it’s sending too much data until it’s sending way too much data, which means it has to clamp down hard.

L4S, on the other hand, cuts out the lag between a problem starting and each device in the chain finding out about it. That makes it easier to sustain high throughput without adding latency that increases how long it takes for data to get where it’s going.
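The difference in how hard a sender reacts is easiest to see side by side. Below is a Python sketch contrasting the classic rule (halve the sending window on a loss) with a DCTCP-style rule from RFC 8257, the kind of “scalable” response L4S senders are built around: keep a running estimate of what fraction of packets were marked as congested, and trim the window in proportion. The 10% mark rate and the starting estimate are assumptions for illustration; g = 1/16 is the gain RFC 8257 suggests.

```python
def classic_on_loss(window: float) -> float:
    """Loss-based reaction: any drop halves the sending window."""
    return window / 2


def scalable_on_marks(window: float, alpha: float, marked_fraction: float,
                      g: float = 1 / 16) -> tuple[float, float]:
    """DCTCP-style reaction (per RFC 8257), applied once per window of data.

    alpha is a moving average of the fraction of packets marked as having
    hit congestion; the window shrinks by alpha / 2, so light congestion
    causes a light trim rather than a halving.
    """
    alpha = (1 - g) * alpha + g * marked_fraction
    if marked_fraction > 0:
        window *= 1 - alpha / 2
    else:
        window += 1  # no marks: keep probing for bandwidth
    return window, alpha


# Hypothetical: a 100-packet window, 10% of packets marked, and the
# estimate already settled near that rate.
print(classic_on_loss(100))  # 50.0
print(scalable_on_marks(100, alpha=0.1, marked_fraction=0.1))  # window ≈ 95, alpha ≈ 0.1
```

A 10% mark rate costs the scalable sender about 5% of its window, where a single loss costs the classic sender half.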

Okay, but how does it do that? Is it magic?

No, it’s not magic, though it’s technically complex enough that I wish it were, so I could just hand-wave it away. If you’re really curious (and familiar with networking), you can read the specification on the Internet Engineering Task Force’s website.

For everyone else, I’ll try to boil it down as much as possible without glossing over too much. The L4S standard adds an indicator to packets that shows whether they ran into congestion on their way from one device to another. If they sail right through, there’s no problem, and nothing happens.

But if they have to wait in a queue for too long, they get marked as having experienced congestion. That way, the devices can start making adjustments right away to keep the congestion from getting worse and, ideally, to clear it up entirely. This keeps data flowing as fast as possible while avoiding the disruptions and heavy-handed corrections that can add delay in other systems.
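That indicator lives in the two-bit Explicit Congestion Notification (ECN) field of the IP header (RFC 3168). L4S packets identify themselves with the ECT(1) codepoint, and a congested queue flips them to CE, “congestion experienced,” instead of dropping them (RFC 9331). The Python sketch below models that marking step; the 1ms threshold is a hypothetical value, and real L4S queues use more refined marking logic.

```python
from enum import IntEnum


class ECN(IntEnum):
    """Values of the two-bit ECN field in the IP header."""
    NOT_ECT = 0b00  # sender doesn't support ECN
    ECT_1 = 0b01    # ECN-capable; identifies L4S traffic
    ECT_0 = 0b10    # ECN-capable, classic behaviour
    CE = 0b11       # congestion experienced


MARK_THRESHOLD_MS = 1.0  # hypothetical: how long a packet may queue before marking


def l4s_queue_forward(ecn: ECN, queue_delay_ms: float) -> ECN:
    """Return the ECN value an L4S packet carries as it leaves the queue."""
    if ecn not in (ECN.ECT_1, ECN.CE):
        raise ValueError("not L4S traffic")
    if queue_delay_ms > MARK_THRESHOLD_MS:
        return ECN.CE  # waited too long: mark it instead of dropping it
    return ecn         # sailed through: nothing changes


def marked_fraction(packets: list[ECN]) -> float:
    """The share of packets that arrived marked CE."""
    return sum(p is ECN.CE for p in packets) / len(packets)


# Hypothetical queue delays (ms) seen by five consecutive L4S packets.
delays = [0.2, 0.5, 1.5, 3.0, 0.8]
arrived = [l4s_queue_forward(ECN.ECT_1, d) for d in delays]
print([p.name for p in arrived])  # ['ECT_1', 'ECT_1', 'CE', 'CE', 'ECT_1']
print(marked_fraction(arrived))   # 0.4
```

Once the receiver reports that fraction back, the sender can scale its response to it rather than waiting for a drop.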

Is L4S really necessary?

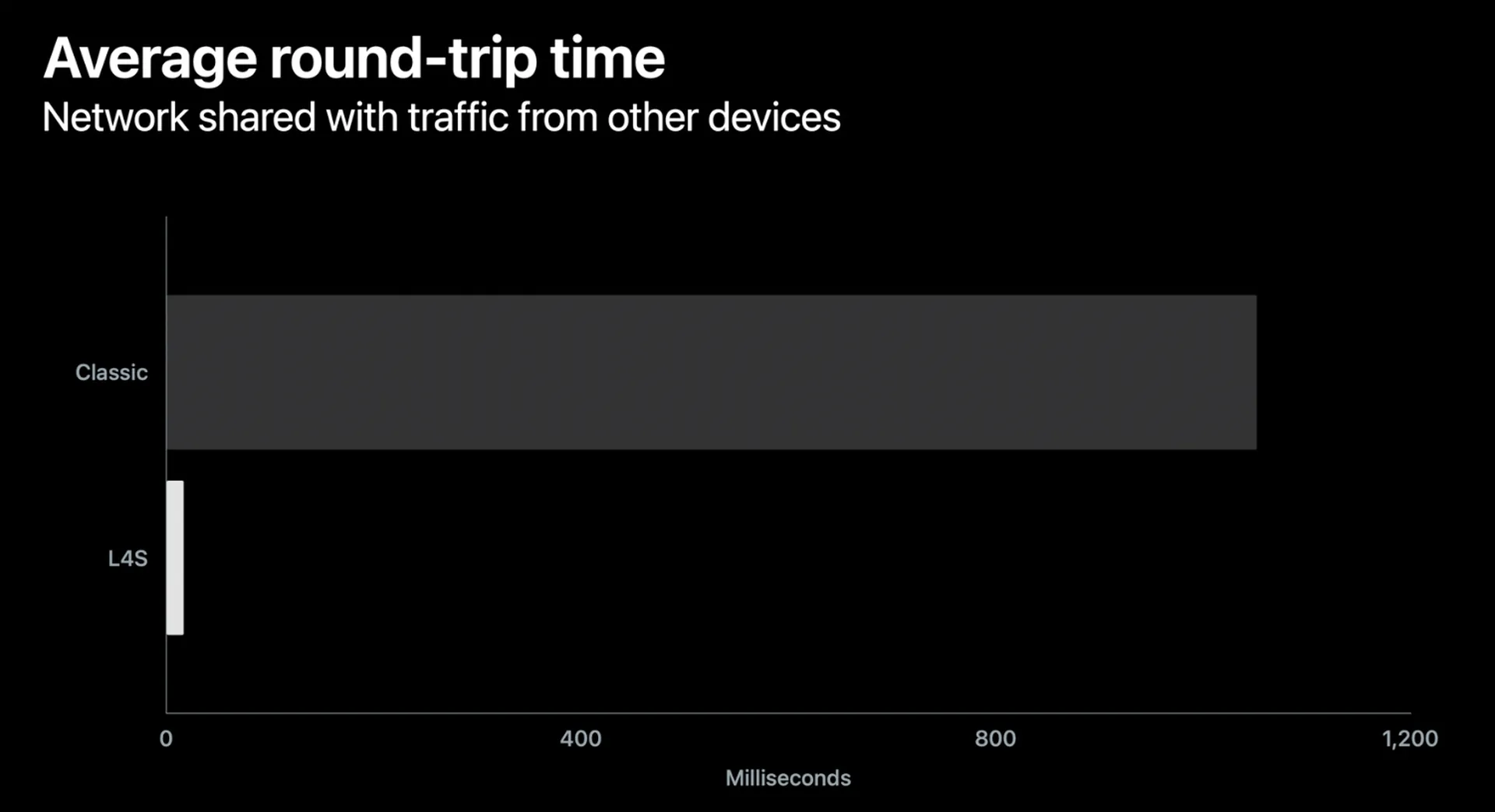

When it comes to lowering internet latency, L4S or something like it is “a pretty necessary thing,” according to Greg White, a technologist at research and development firm CableLabs who worked on the standard. “This buffering delay has typically ranged from hundreds to thousands of milliseconds in some cases. Some prior buffer bloat remedies reduced this to tens of milliseconds, whereas L4S reduces it to single-digit milliseconds.”

That could clearly improve the overall experience of using the internet. “These days, most people’s web browsing is limited by roundtrip time rather than connection capacity. Beyond roughly six to ten megabits per second, latency becomes more important in determining how quickly a web page loads.”

Ultra-low latency could also be critical for future use cases. We’ve already talked about game streaming, which can be a nightmare with too much latency, but imagine trying to stream a VR game. In that situation, too much latency could make you feel sick, not just make the game less fun to play.

What can’t L4S do?

For one thing, it can’t change the laws of physics. Data can only travel so fast, and sometimes it has to travel a long way. For example, if I tried to have a video call with someone in Perth, Australia, there would be at least 51ms of latency each way; that’s how long it takes light to travel in a straight line from where I live to there, assuming it’s in a vacuum. In reality, it would take somewhat longer, because there’s no direct route from my place to Perth, light travels a bit slower through fibre optic cables, and the data would make a few extra hops along the way.
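The physics floor is easy to compute: distance divided by the speed of light in whatever the signal travels through. The sketch below works backward from the article’s 51ms vacuum figure to the straight-line distance it implies, then redoes the trip through fibre, assuming a group index of about 1.47 (a typical value for silica fibre, meaning light in the glass travels roughly a third slower). Detours and extra hops would add more on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # in a vacuum
FIBRE_INDEX = 1.47  # assumed typical group index for silica optical fibre


def one_way_ms(distance_km: float, index: float = 1.0) -> float:
    """Minimum one-way delay over a straight path at speed c / index."""
    return distance_km / (SPEED_OF_LIGHT_KM_S / index) * 1000


def distance_for_vacuum_ms(ms: float) -> float:
    """Straight-line distance light covers in a vacuum in `ms` milliseconds."""
    return SPEED_OF_LIGHT_KM_S * ms / 1000


distance = distance_for_vacuum_ms(51)
print(f"distance: {distance:,.0f} km")                                 # 15,289 km
print(f"vacuum, one way: {one_way_ms(distance):.1f} ms")               # 51.0 ms
print(f"fibre, one way:  {one_way_ms(distance, FIBRE_INDEX):.1f} ms")  # 75.0 ms
print(f"fibre, round trip: {2 * one_way_ms(distance, FIBRE_INDEX):.0f} ms")  # 150 ms
```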

This is why, if you’re not dealing with real-time data, most services will aim to cache it closer to where you live. If you’re viewing something popular on Netflix or YouTube, chances are you’re getting that data from a server near you, even if it’s not near the firms’ major data centres.

L4S can’t do anything about that physical latency, but it can keep a lot of extra latency from piling up on top of it.

So, when will I get it?

This is the big question in networking, especially after IPv6, an upgrade to the way computers find one another on the internet, took a decade to roll out. So here’s the bad news: L4S isn’t widely used in the field just yet.

However, several notable players are involved in its development. When we spoke with White from CableLabs, he said there are already around 20 cable modems that support it, and that several ISPs, such as Comcast, Charter, and Virgin Media, have taken part in events to test how prerelease hardware and software work with L4S. Companies such as Nokia, Vodafone, and Google have also attended, suggesting there’s some real interest.

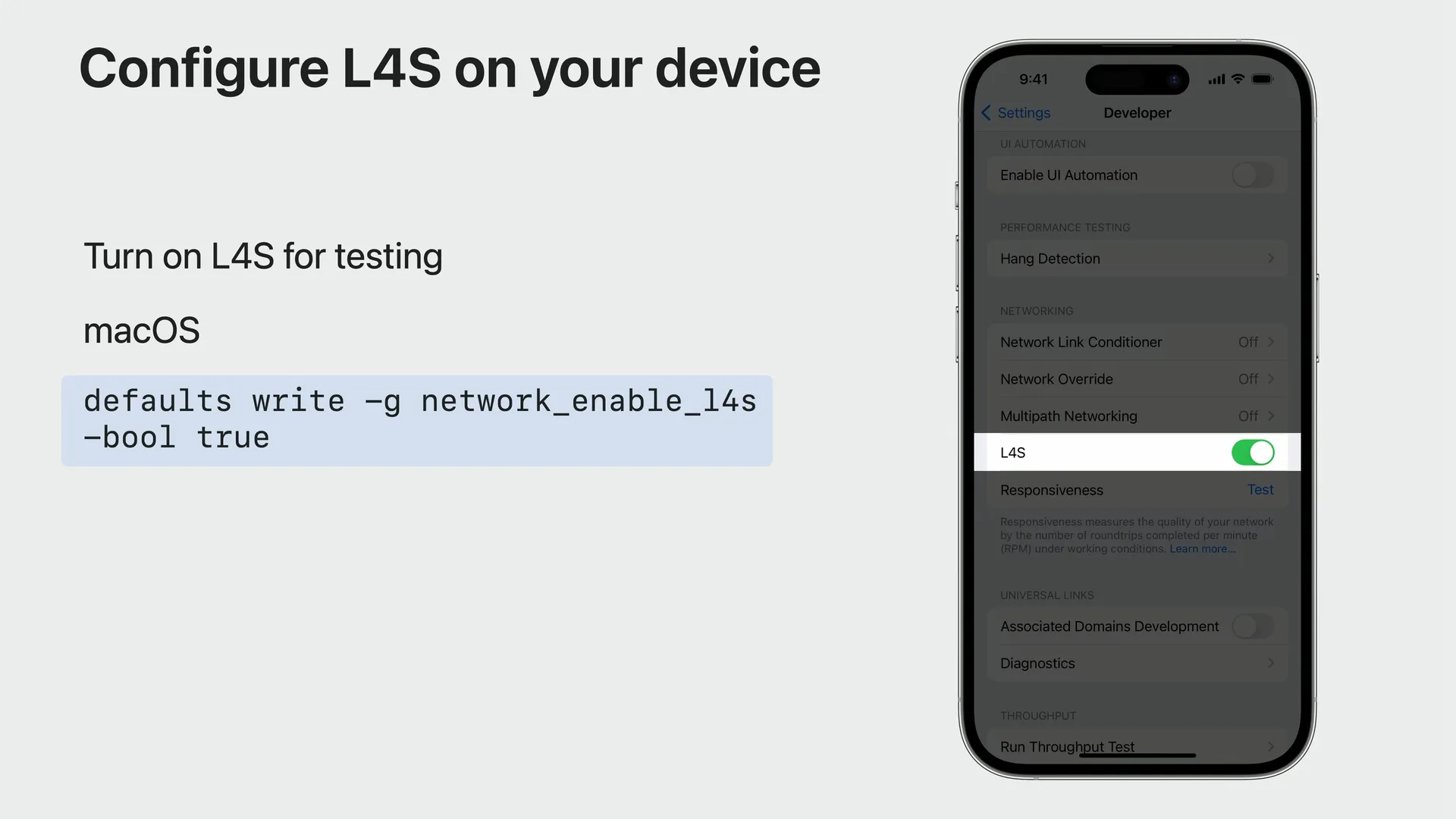

Apple gave L4S much more attention at WWDC 2023, after shipping beta support for it in iOS 16 and macOS Ventura. In a WWDC session video, Apple explains that when developers use existing frameworks, L4S support comes built in without any code changes. Apple is gradually rolling out L4S to a random sample of iOS 17 and macOS Sonoma users, while developers can turn it on for testing.

Around the same time as WWDC, Comcast announced the industry’s first L4S field trials, in collaboration with Apple, Nvidia, and Valve. Customers in the trial markets with compatible hardware, such as the Xfinity 10G Gateway XB7 / XB8, Arris S33, or Netgear CM1000v2 gateway, can now try it out.

According to Jason Livingood, Comcast’s vice president of technology policy, product, and standards (and the person whose tweets first brought L4S to our attention), “Low Latency DOCSIS (LLD) is a key component of the Xfinity 10G Network” that includes L4S, and the company has learned a lot from the trials that it can use to implement tweaks next year as it prepares for an eventual launch.

To utilise L4S, you must have an operating system, router, and server that support it.

Another advantage of L4S is that it’s largely compatible with existing congestion control methods. Traffic using it and older protocols can coexist without hurting each other’s experience, and because it isn’t an all-or-nothing proposition, it can be phased in. That’s far more likely to happen than a fix that would require everyone to make a major change at the same time.
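One way a bottleneck can let L4S and older traffic share a link is the “DualQ Coupled” design described in RFC 9332: packets carrying the L4S identifier go into a shallow, low-latency queue, everything else into a classic queue, and a single base congestion level drives both. Classic flows see that level squared and L4S flows see it multiplied by a coupling factor k (RFC 9332 suggests 2), which roughly balances the two, since classic and scalable senders respond to the same signal very differently. The Python below is a heavily simplified sketch of that idea, not the RFC’s full algorithm.

```python
from enum import IntEnum


class ECN(IntEnum):
    """Values of the two-bit ECN field in the IP header."""
    NOT_ECT = 0b00
    ECT_1 = 0b01  # L4S identifier
    ECT_0 = 0b10
    CE = 0b11


COUPLING_K = 2.0  # coupling factor; 2 is the value RFC 9332 suggests


def classify(ecn: ECN) -> str:
    """ECT(1) and CE packets take the low-latency queue; the rest go classic."""
    return "l4s" if ecn in (ECN.ECT_1, ECN.CE) else "classic"


def coupled_probabilities(base_p: float) -> tuple[float, float]:
    """Turn one base congestion level into (L4S mark prob, classic drop/mark prob)."""
    p_l4s = min(1.0, COUPLING_K * base_p)
    p_classic = base_p ** 2
    return p_l4s, p_classic


for ecn in ECN:
    print(ecn.name, "->", classify(ecn))

print(coupled_probabilities(0.1))  # roughly (0.2, 0.01)
```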

Still, a lot of work has to happen before your next Zoom call can be nearly latency-free. Not every hop in the network has to support L4S for it to make a difference, but the ones that are usually bottlenecks do. (In the United States, that generally means your Wi-Fi router or the links in your “access network,” the equipment you use to connect to your ISP and that your ISP uses to connect to everyone else, according to White.) It matters on the other end, too; the servers you’re connected to have to support it as well.

Individual apps shouldn’t have to change much to support it, especially if they leave the networking details to your device’s operating system. (That assumes your operating system supports L4S, which isn’t always the case.) Companies that write their own networking code to squeeze out maximum performance, on the other hand, would almost certainly have to update it to support L4S, but given the potential benefits, it would likely be worth it.
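For an app with its own networking code, one small piece of that job is tagging outgoing packets with the ECT(1) codepoint so L4S-aware queues recognise them. On many platforms a UDP socket can set the ECN bits through the standard `IP_TOS` socket option, as in this Python sketch (IPv4 only; behaviour varies by operating system, and Linux, for instance, won’t let you set them this way on TCP sockets). This is only a first step, under those assumptions: real L4S support also means reading CE marks on received packets, feeding them back to the sender, and running a scalable congestion controller.

```python
import socket

ECN_MASK = 0b11  # the ECN field is the low two bits of the IPv4 TOS byte
ECT_1 = 0b01     # the L4S identifier codepoint


def tag_socket_as_l4s(sock: socket.socket) -> None:
    """Set ECT(1) on outgoing IPv4 packets, keeping the other TOS (DSCP) bits."""
    tos = sock.getsockopt(socket.IPPROTO_IP, socket.IP_TOS)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, (tos & ~ECN_MASK) | ECT_1)


if __name__ == "__main__":
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as udp:
        tag_socket_as_l4s(udp)
        ecn_bits = udp.getsockopt(socket.IPPROTO_IP, socket.IP_TOS) & ECN_MASK
        print(ecn_bits)  # 1 on Linux, if the OS accepted the setting
```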

Of course, we’ve seen other promising technologies fail to take off, and it may be hard to get past the chicken-and-egg problem that can crop up early on. Why would network operators bother supporting L4S when there’s no internet traffic using it? And if no network operators support it, why should the apps and services that generate that traffic bother with it?

How can I determine whether L4S will improve my internet?

That’s an excellent question. The main indicator will be how much latency you’re already dealing with day to day. As mentioned earlier, ping is sometimes used to measure latency, but just knowing your average ping won’t tell you the whole story. What really matters is how high your ping gets when your network is under load, and where it spikes.

Fortunately, some speed test apps are starting to show this information. In May 2022, Ookla added a more detailed view of latency to Speedtest, one of the most popular tools for checking how fast your internet is. To see it, run a test, select “detailed result,” and scroll down to the “responsiveness” section. When I ran one, it told me my ping was 17 milliseconds when nothing else was going on, which sounds pretty good.

However, during the download test, when I was actually using my connection, it spiked as high as 855 milliseconds. That’s nearly an entire second, which would feel like an eternity if I were, say, waiting for a webpage to load, especially if that delay got multiplied across several round trips.
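Using the two numbers from that test, a quick Python calculation shows how load-induced delay compounds over a page’s round trips. The 10 round trips are a hypothetical figure, and treating every trip as hitting the peak delay is a worst case, since real delay under load fluctuates.

```python
def latency_under_load(idle_ms: float, loaded_ms: float, round_trips: int) -> dict:
    """Compare total waiting time at idle vs. under load across a series of round trips."""
    return {
        "added_per_trip_ms": loaded_ms - idle_ms,
        "idle_total_ms": idle_ms * round_trips,
        "loaded_total_ms": loaded_ms * round_trips,
    }


# 17ms idle and 855ms peak are from the Speedtest run described above;
# 10 round trips is a hypothetical page load.
print(latency_under_load(idle_ms=17, loaded_ms=855, round_trips=10))
# {'added_per_trip_ms': 838, 'idle_total_ms': 170, 'loaded_total_ms': 8550}
```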

(Anyone who has used dial-up is welcome to tell me how soft I am and to reminisce about the days when every webpage took 10 seconds to load, uphill both ways.)

If you only ever do one thing on the internet at a time and utilise sites that almost no one else uses, L4S may not be useful to you when it eventually arrives. However, that is not a realistic scenario. If we can get the technology onto our increasingly crowded home networks, which we use to browse the same sites as everyone else, there’s a chance it will be a quiet revolution in the web’s user experience. And once the majority of people have it, people may begin designing apps that would not be possible without ultra-low latency.